LangChain4j: Bringing AI-Powered Language Models to Java

Intro

Artificial Intelligence (AI) is reshaping software engineering, opening vast opportunities in automation, data analysis, and user experience. Integrating AI-powered features, especially within Java applications, has often been challenging — until the arrival of LangChain4j in 2023.

Created to bridge the gap in AI integration for Java developers, LangChain4j offers a streamlined framework for adding Large Language Models (LLMs) to Java applications, simplifying the development of intelligent, interactive software.

LangChain4j’s core features include:

- Unified APIs for smooth integration with various LLMs,

- Comprehensive toolbox packed with features to speed up development,

- Detailed examples to help you start building quickly.

In this article, we’ll explore the basics of LangChain4j to learn how to create a simple chat application using Java and Spring Boot with LangChain4j.

Setting up LangChain4j

To begin, configure the dependencies using Maven. We’ll use Google’s Gemini as the LLM, which requires the langchain4j-google-ai-gemini dependency. To simplify development, we’ll also add the LangChain4j Spring Boot starter, which provides the high-level AI Services support that streamlines interactions with the model.

    <dependency>
        <groupId>dev.langchain4j</groupId>
        <artifactId>langchain4j-google-ai-gemini</artifactId>
        <version>0.35.0</version>
    </dependency>
    <dependency>
        <groupId>dev.langchain4j</groupId>
        <artifactId>langchain4j-spring-boot-starter</artifactId>
        <version>0.35.0</version>
    </dependency>

LangChain4j provides two levels of API. Here we start with ChatLanguageModel, the low-level API that offers full flexibility. You can adjust parameters such as creativity, response length, and media type (e.g., text or images if the model supports it). The essential settings are apiKey and modelName; everything else falls back to its default.

Since we are using Spring Boot, it is convenient to define the model as a Spring bean so that its configuration lives in one place and it can be injected wherever it is needed.

Tip: Obtain your free Google Gemini API key here: https://ai.google.dev/gemini-api/docs/api-key.

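    @Bean
    ChatLanguageModel geminiAiModel(
            // Assumption: the API key is stored under a property named "gemini.api-key".
            @Value("${gemini.api-key}") String apiKey) {
        return GoogleAiGeminiChatModel.builder()
                .apiKey(apiKey)
                .modelName("gemini-1.5-flash")
                .build();
    }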

Memory and History Management with ChatMemory

One of the most valuable tools LangChain4j provides is the ChatMemory abstraction. While model history logs all messages, ChatMemory helps the model “remember” key conversation parts, giving it contextual awareness in responses.

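Key Insight: ChatMemory differs from history. History captures every exchanged message, whereas ChatMemory retains only what the model needs for context, so you do not have to maintain it manually. The MessageWindowChatMemory configured below, for example, keeps only the 10 most recent messages.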

    @Bean
    ChatMemory chatMemory() {
        return MessageWindowChatMemory.withMaxMessages(10);
    }
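To make the difference between history and a message window concrete, here is a minimal plain-Java sketch. It is an illustration only and not LangChain4j’s implementation: the class SlidingWindowMemory and its methods are hypothetical names, and messages are reduced to plain strings. It contrasts a full history list with a window capped at a fixed number of messages.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical illustration of a message window; not LangChain4j code.
class SlidingWindowMemory {

    private final int maxMessages;
    private final Deque<String> window = new ArrayDeque<>();

    SlidingWindowMemory(int maxMessages) {
        if (maxMessages < 1) {
            throw new IllegalArgumentException("maxMessages must be at least 1");
        }
        this.maxMessages = maxMessages;
    }

    // Appends a message and evicts the oldest ones once the cap is exceeded.
    void add(String message) {
        window.addLast(message);
        while (window.size() > maxMessages) {
            window.removeFirst();
        }
    }

    // Returns a copy of the retained messages, oldest first.
    List<String> messages() {
        return new ArrayList<>(window);
    }

    public static void main(String[] args) {
        List<String> history = new ArrayList<>();                // keeps everything
        SlidingWindowMemory memory = new SlidingWindowMemory(3); // keeps the last 3

        for (int i = 1; i <= 5; i++) {
            String message = "message " + i;
            history.add(message);
            memory.add(message);
        }

        System.out.println("history size: " + history.size()); // history size: 5
        System.out.println("memory: " + memory.messages());    // memory: [message 3, message 4, message 5]
    }
}
```

The same trade-off applies when choosing the window size for a real chat memory: a larger window gives the model more context, but more messages are sent with every request.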

Leveraging AI Services for Simplified Development

LangChain4j’s AI Services API simplifies development by abstracting low-level model interactions. With AI Services, input formatting and output parsing for your LLM are handled declaratively. It works much like Spring Data JPA: you define an interface, and LangChain4j provides the implementation.

For instance, creating a simple chat method is straightforward: define input and output as Strings (or custom objects), and LangChain4j handles the rest. You can even set a system prompt annotation, such as @SystemMessage, to customize model behaviour (e.g., “respond as a pirate”).
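The "define an interface, get an implementation" idea can be illustrated with a JDK dynamic proxy, one common way for frameworks to implement interfaces at runtime. The sketch below uses only the standard library and does not claim to mirror LangChain4j’s internals: SimpleAssistant, InterfaceProxyDemo, and the backend function standing in for a model call are all hypothetical names.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.function.Function;

// Hypothetical interface standing in for a user-defined assistant.
interface SimpleAssistant {
    String chat(String userMessage);
}

// Hypothetical demo class; not part of LangChain4j.
class InterfaceProxyDemo {

    // Builds an implementation of the given interface at runtime.
    // Every interface method call is forwarded to the backend function,
    // which plays the role of the model call in this sketch.
    static <T> T create(Class<T> type, Function<String, String> backend) {
        InvocationHandler handler = (proxy, method, args) -> {
            // toString, hashCode and equals are delegated to the backend object.
            if (method.getDeclaringClass() == Object.class) {
                return method.invoke(backend, args);
            }
            // Assumption: each interface method takes a single String argument.
            return backend.apply((String) args[0]);
        };
        Object instance = Proxy.newProxyInstance(
                type.getClassLoader(), new Class<?>[] {type}, handler);
        return type.cast(instance);
    }

    public static void main(String[] args) {
        // A fake "model" that simply prefixes the user message.
        SimpleAssistant assistant =
                create(SimpleAssistant.class, message -> "Arr, ye said: " + message);
        System.out.println(assistant.chat("hello")); // Arr, ye said: hello
    }
}
```

The calling code only ever sees the interface, which is why swapping the model or adding a system prompt does not change how the assistant is used.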

Here’s an example of creating a chat assistant interface:

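    import dev.langchain4j.service.SystemMessage;
    import dev.langchain4j.service.UserMessage;
    import dev.langchain4j.service.spring.AiService;

    @AiService
    public interface Assistant {

        @SystemMessage("You are a polite assistant but also a pirate.")
        String chat(@UserMessage String userMessage);
    }

Setting Up WebSocket for Real-Time Communication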

To enable real-time communication between your Java Spring Boot backend and frontend, use the WebSocket API. First, add the WebSocket dependency in your project.

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-websocket</artifactId>
    </dependency>

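Configure WebSocket by annotating a configuration class with @EnableWebSocketMessageBroker and implementing WebSocketMessageBrokerConfigurer. In the registerStompEndpoints method, register “/chat” as the STOMP endpoint and enable SockJS as a fallback option.

If you are hosting the frontend and backend on separate origins, remember to configure the allowed origins; the “*” pattern used here accepts any origin and should be narrowed for production. Then, set up the message broker in the configureMessageBroker method: destinations starting with “/queue” or “/topic” are served by the simple in-memory broker, while messages sent to destinations starting with “/app” are routed to controller methods.

With the configuration ready, create a message-handling controller. Use @MessageMapping to specify the destination a method handles, and @SendToUser to designate where the response is sent. Because of the “/app” prefix, the client sends messages to “/app/chat” and receives replies on “/user/queue/reply”.

Finally, inject the assistant interface into the controller so that each incoming message is answered by the model and the reply is sent back to the user who asked.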

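    // WebSocketConfig.java
    import org.springframework.context.annotation.Configuration;
    import org.springframework.messaging.simp.config.MessageBrokerRegistry;
    import org.springframework.web.socket.config.annotation.EnableWebSocketMessageBroker;
    import org.springframework.web.socket.config.annotation.StompEndpointRegistry;
    import org.springframework.web.socket.config.annotation.WebSocketMessageBrokerConfigurer;

    @Configuration
    @EnableWebSocketMessageBroker
    public class WebSocketConfig implements WebSocketMessageBrokerConfigurer {

        @Override
        public void registerStompEndpoints(StompEndpointRegistry registry) {
            // STOMP endpoint with SockJS fallback; "*" allows any origin.
            registry.addEndpoint("/chat").setAllowedOriginPatterns("*").withSockJS();
        }

        @Override
        public void configureMessageBroker(MessageBrokerRegistry config) {
            // Destinations served by the simple in-memory broker.
            config.enableSimpleBroker("/queue", "/topic");
            // Prefix for messages routed to @MessageMapping methods.
            config.setApplicationDestinationPrefixes("/app");
        }
    }

    // ChatController.java
    import org.springframework.messaging.handler.annotation.MessageMapping;
    import org.springframework.messaging.handler.annotation.Payload;
    import org.springframework.messaging.simp.annotation.SendToUser;
    import org.springframework.stereotype.Controller;

    @Controller
    public class ChatController {

        private final Assistant assistant;

        public ChatController(Assistant assistant) {
            this.assistant = assistant;
        }

        // Handles messages sent to "/app/chat" and replies only to the sender.
        @MessageMapping("/chat")
        @SendToUser("/queue/reply")
        public String chat(@Payload String userMessage) {
            return assistant.chat(userMessage);
        }
    }

Building the Front-End Client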

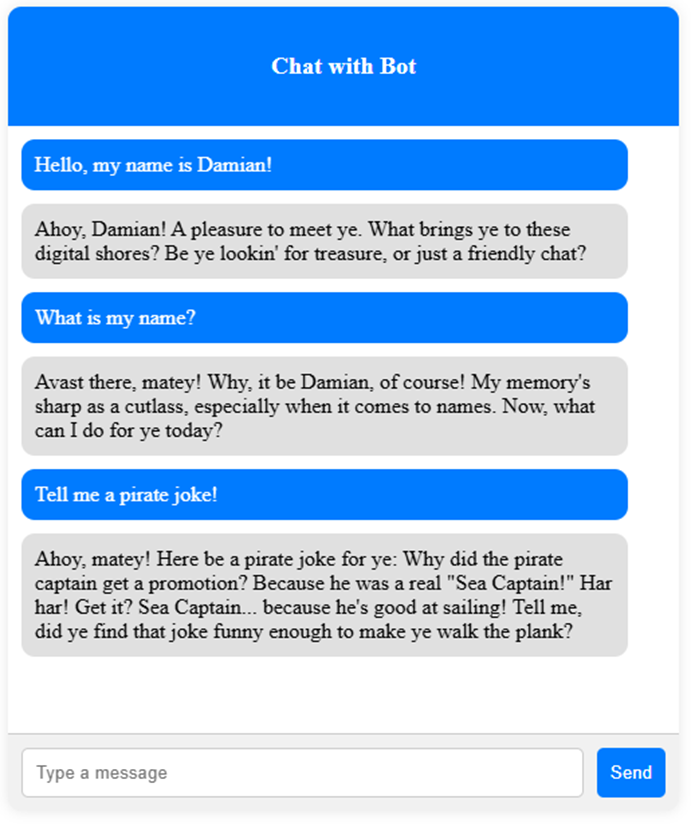

A simple front-end client using HTML, CSS, and JavaScript lets users interact with the chat application in real time. Using SockJS and STOMP, it establishes a WebSocket connection, subscribes to the reply channel, and renders the conversation. The script defines three main functions:

- connect initializes the WebSocket connection to the server and subscribes to incoming messages,

- sendMessage retrieves the user input, sends it to the server, and displays it in the chat,

- displayMessage formats a message and appends it to the chat body.

With these pieces in place, users can talk to the chatbot in real time.

    <html>
    <head>
        <title>Chat WebSocket</title>
        <meta charset="UTF-8">
        <meta name="viewport" content="width=device-width, initial-scale=1.0">
        <link rel="stylesheet" href="styles.css">
        <script src="sockjs-0.3.4.js"></script>
        <script src="stomp.js"></script>
        <script type="text/javascript">
            var stompClient = null;

            function connect() {
                var socket = new SockJS('http://127.0.0.1:8080/chat');
                stompClient = Stomp.over(socket);
                stompClient.connect({}, function (frame) {
                    console.log('Connected: ' + frame);
                    stompClient.subscribe('/user/queue/reply', function (messageOutput) {
                        displayMessage(messageOutput.body, "bot");
                    });
                });
            }

            function sendMessage() {
                const inputField = document.getElementById('user-input');
                const userMessage = inputField.value;
                if (userMessage.trim() === "") return;
                displayMessage(userMessage, 'user');
                stompClient.send('/app/chat', {}, userMessage);
                inputField.value = "";
                scrollChatToBottom();
            }

            function displayMessage(message, sender) {
                const chatBody = document.getElementById('chat-body');
                const messageElement = document.createElement('div');
                messageElement.classList.add('message', sender);
                messageElement.textContent = message;
                chatBody.appendChild(messageElement);
            }

            function scrollChatToBottom() {
                const chatBody = document.getElementById('chat-body');
                chatBody.scrollTop = chatBody.scrollHeight;
            }

            function checkEnter(event) {
                if (event.key === "Enter") {
                    sendMessage();
                }
            }
        </script>
    </head>
    <body onload="connect()">
        <div class="chat-box" id="chat-box">
            <div class="chat-header">
                <h4>Chat with Bot</h4>
            </div>
            <div class="chat-body" id="chat-body"></div>
            <div class="chat-footer">
                <input type="text" id="user-input" placeholder="Type a message" onkeypress="checkEnter(event)">
                <button onclick="sendMessage()">Send</button>
            </div>
        </div>
    </body>
    </html>

Testing the Application

To test your chat application, start the Spring Boot server, then open index.html to load the client. Once loaded, WebSocket should automatically establish the connection, allowing messages to flow between client and server.

Summary

This guide provided an overview of LangChain4j, covering essential features and setup steps for building intelligent, interactive Java applications. From dependency configuration to real-time WebSocket messaging, LangChain4j makes AI integration easier for Java developers. Explore more at the LangChain4j documentation to unlock its full potential and take your Java projects to the next level (https://docs.langchain4j.dev/).
